Is RNN Part of Deep Learning? Here’s the Clear Answer

When exploring artificial intelligence architectures, one question frequently arises: where do recurrent neural networks sit within the deep learning landscape? The answer lies in their specialised design for processing sequential information. Unlike standard models that treat inputs as isolated events, these systems retain contextual awareness through internal memory mechanisms.

Developed to handle time-series data and language patterns, these architectures build on foundational neural network principles. Their ability to reference previous computations creates feedback loops, a feature absent in basic feedforward structures. This approach lets them model dependencies across sequences, which suits them to tasks like speech recognition or predictive text.

Advances in computational power and algorithmic refinements, notably gated designs such as LSTMs, cemented their position within deep learning frameworks. While newer models such as transformers have emerged, their core functionality remains valuable for temporal data analysis, and applications across finance, healthcare and natural language processing demonstrate their continued relevance.

This section clarifies their classification while debunking common misconceptions. Subsequent discussions will explore their technical evolution and practical implementations, revealing why they remain a cornerstone of intelligent system design.

Introduction to Recurrent Neural Networks

Modern artificial intelligence thrives on architectures designed for temporal patterns. Among these, recurrent neural networks stand out for their ability to interpret sequences where order determines meaning. Traditional models struggle with time-based data, treating each input as isolated rather than interconnected.

What Defines These Systems?

Unlike standard feedforward networks, RNNs maintain an internal (hidden) state that acts as a dynamic memory bank. This mechanism allows them to reference previous inputs when processing new ones, creating context-aware outputs. As one researcher notes: “Their power lies in modelling relationships across time steps – a fundamental shift from static analysis.”
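
A minimal sketch of that idea, assuming a single hidden unit with hand-picked weights (the function names and values are illustrative, not from any library): two sequences that end with the same input finish in different hidden states, because the state carries a trace of everything seen before.

```python
import math


def rnn_step(x, h_prev, w_x=0.8, w_h=0.9, b=0.0):
    """One vanilla RNN step: combine the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def final_state(sequence):
    """Feed a sequence through rnn_step, starting from an empty (zero) state."""
    h = 0.0
    for x in sequence:
        h = rnn_step(x, h)
    return h


# Same last input (0.5), different histories.
after_positive = final_state([1.0, 1.0, 0.5])
after_negative = final_state([-1.0, -1.0, 0.5])
print(after_positive, after_negative)
```

A feedforward model given only the last input would return the same value in both cases.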

Why Sequences Matter

Consider these scenarios where sequence order is critical:

- Predicting stock prices using historical trends

- Translating sentences while preserving grammar rules

- Detecting heart arrhythmias in ECG readings

Such tasks require systems that understand temporal dependencies – precisely where these architectures excel. By retaining contextual information, they bridge gaps between discrete events, enabling sophisticated pattern recognition in streaming data.

Deep Learning Fundamentals in Modern AI

Contemporary AI systems rely on layered architectures that automatically uncover patterns within complex datasets. These multi-tiered structures form the backbone of intelligent decision-making across industries, from healthcare diagnostics to financial forecasting.

Key Characteristics of Deep Learning

Traditional machine learning methods often require manual feature engineering. In contrast, deep architectures learn hierarchical representations themselves through successive processing layers. This automated abstraction lets systems work from raw inputs, whether pixel arrays or audio waveforms, with far less hand-crafted preprocessing.

Three pillars define this approach:

- End-to-end training that refines feature extraction and task execution simultaneously

- Scalable processing of high-dimensional information like video streams or genomic sequences

- Adaptive learning through gradient-based optimisation techniques

These capabilities emerge from interconnected node layers that progressively transform input data. Lower tiers might detect edges in images, while higher layers recognise complex shapes or objects. Such multi-level analysis proves critical for tasks demanding contextual awareness, like parsing sentence structures or predicting equipment failures.
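
The layer-by-layer transformation can be sketched in a few lines of plain Python. The weights here are hand-picked assumptions rather than learned values; in practice they would be fitted by gradient-based optimisation, and frameworks would vectorise the arithmetic.

```python
def dense(x, weights, bias):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(values):
    return [max(0.0, v) for v in values]


def forward(x, layers):
    """Apply each (weights, bias) layer in turn; ReLU between layers, last layer linear."""
    for i, (weights, bias) in enumerate(layers):
        x = dense(x, weights, bias)
        if i < len(layers) - 1:
            x = relu(x)
    return x


# Two inputs -> two hidden units -> one output (hand-picked, untrained weights).
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
print(forward([2.0, 1.0], layers))
```

Each layer's output becomes the next layer's input, which is what lets later layers build on features computed by earlier ones.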

Successful implementations require substantial computational resources and extensive training datasets. Modern frameworks leverage parallel processing units and advanced regularisation methods to manage these demands effectively. As hardware evolves, these systems continue pushing boundaries in pattern recognition and predictive accuracy.

Are Recurrent Neural Networks Deep Learning?

Artificial intelligence’s evolution hinges on specialised architectures tackling distinct data types. Two prominent models – convolutional neural networks (CNNs) and recurrent neural networks – demonstrate how design dictates function. While both belong to deep learning paradigms, their operational philosophies diverge significantly.

Differentiating RNNs from CNNs

CNNs dominate spatial analysis, excelling at interpreting grid-based information like photographs. Their layered filters detect edges, textures, and shapes through hierarchical pattern recognition. RNNs, conversely, specialise in temporal sequences where context determines meaning – think speech rhythms or sentence construction.

| Feature | CNNs | RNNs |
|---|---|---|
| Application Focus | Spatial Patterns | Temporal Sequences |
| Data Type | Static (Images) | Dynamic (Text/Time Series) |
| Key Mechanism | Convolution Filters | Feedback Loops |
| Memory Capacity | None | Internal State Tracking |

Role of Memory in Deep Learning

What sets RNNs apart is their internal memory, enabling systems to reference prior inputs during current computations. This temporal awareness proves vital for tasks requiring contextual continuity, like translating idiomatic phrases or predicting stock trends.

Unlike CNNs’ fixed-depth structures, RNNs achieve depth by unfolding through time, processing sequences step by step while sharing parameters across iterations. As noted in IBM’s analysis, this approach allows “dynamic temporal behaviour modelling”, and because the same weights are reused at every step, the parameter count does not grow with sequence length (although computation grows linearly with it).
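
A sketch of what parameter sharing means in practice, assuming a small vanilla RNN with randomly initialised weights (the helper names and sizes are illustrative). The same three weight arrays are reused at every step, so the parameter count is identical whether the sequence has 3 steps or 100.

```python
import math
import random


def init_params(input_size, hidden_size, seed=0):
    """One shared set of vanilla-RNN weights (random values, for illustration only)."""
    rng = random.Random(seed)
    W_xh = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
    W_hh = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
    b_h = [0.0] * hidden_size
    return W_xh, W_hh, b_h


def count_params(params):
    W_xh, W_hh, b_h = params
    return sum(map(len, W_xh)) + sum(map(len, W_hh)) + len(b_h)


def unroll(sequence, params):
    """Run the RNN over a sequence, reusing the same weights at every time step."""
    W_xh, W_hh, b_h = params
    h = [0.0] * len(b_h)
    states = []
    for x in sequence:
        h = [
            math.tanh(
                sum(w * xi for w, xi in zip(W_xh[j], x))
                + sum(w * hi for w, hi in zip(W_hh[j], h))
                + b_h[j]
            )
            for j in range(len(b_h))
        ]
        states.append(h)
    return states


params = init_params(input_size=2, hidden_size=4)
short_run = unroll([[1.0, 0.0]] * 3, params)
long_run = unroll([[1.0, 0.0]] * 100, params)
print(count_params(params), len(short_run), len(long_run))
```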

Both architectures exemplify deep learning’s versatility. CNNs decode spatial hierarchies, while RNNs map temporal dependencies – together forming complementary pillars of modern AI systems.

Core Mechanisms of RNN Architecture

Specialised processing of sequential data demands unique structural features. At every time step, these systems combine fresh input with historical context stored in hidden layers. This dual-input approach enables continuous adaptation to evolving patterns – whether in language translation or weather forecasting.
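
In the common vanilla (Elman-style) formulation, that combination is a single update rule. The symbols are our notation rather than the article's: $x_t$ is the input at step $t$, $h_t$ the hidden state, $y_t$ the output, and the weight matrices $W_{xh}$, $W_{hh}$, $W_{hy}$ and biases $b_h$, $b_y$ are shared across all time steps.

$$
h_t = \tanh\left(W_{xh}\,x_t + W_{hh}\,h_{t-1} + b_h\right), \qquad y_t = W_{hy}\,h_t + b_y
$$

Since $h_{t-1}$ itself depends on $h_{t-2}$, and so on back to the start of the sequence, $h_t$ can carry information from every earlier input.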

Activation Functions’ Impact

Mathematical operations governing memory updates prove crucial. Functions like tanh and sigmoid regulate how hidden layers blend current input with previous time step data. As one engineer explains: “Without proper activation control, systems either forget critical context or drown in irrelevant details.”

ReLU variants sometimes replace traditional functions to combat vanishing gradients. Each choice affects how networks retain long-range dependencies. The right balance prevents oversaturation while maintaining temporal awareness across extended sequences.
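
To see why the choice matters, the sketch below evaluates each function's derivative on a grid (an illustration, not a training run). The derivative of tanh peaks at 1 and that of sigmoid at 0.25, so gradients passed back through many saturating units tend to shrink, while ReLU passes a gradient of exactly 1 for positive inputs.

```python
import math


def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2


def sigmoid_grad(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def relu_grad(z):
    return 1.0 if z > 0 else 0.0


grid = [i / 100 for i in range(-500, 501)]  # z from -5 to 5
max_tanh_grad = max(tanh_grad(z) for z in grid)        # peaks at z = 0
max_sigmoid_grad = max(sigmoid_grad(z) for z in grid)  # peaks at z = 0

# Best case for sigmoid: 20 chained derivatives scale a gradient by 0.25 ** 20.
sigmoid_chain_20 = max_sigmoid_grad ** 20
print(max_tanh_grad, max_sigmoid_grad, sigmoid_chain_20, relu_grad(2.0))
```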

Understanding Backpropagation Through Time

Training these architectures involves unfolding them across time steps, like rewinding a film frame by frame. Gradients flow backwards through both the network layers and chronological order, and because the same weights are applied at every step, their gradient is the sum of the contributions from all steps. This temporal dimension complicates weight adjustments compared with standard feedforward models.
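
A minimal sketch of BPTT for a one-unit (scalar) RNN with a loss on the final step only. The model, the names `run_rnn` and `loss_and_grads`, and the numbers are illustrative assumptions; frameworks such as PyTorch perform the same bookkeeping automatically. The finite-difference comparison at the end is a standard way to confirm hand-derived gradients.

```python
import math


def run_rnn(w, u, xs, h0=0.0):
    """Scalar RNN h_t = tanh(w * h_{t-1} + u * x_t); returns [h_0, h_1, ..., h_T]."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs


def loss_and_grads(w, u, xs, target):
    """Squared error on the final state, with dL/dw and dL/du from BPTT."""
    hs = run_rnn(w, u, xs)
    loss = 0.5 * (hs[-1] - target) ** 2
    dw = du = 0.0
    dh = hs[-1] - target  # dL/dh_T
    for t in range(len(xs), 0, -1):  # walk the unrolled steps backwards
        da = dh * (1.0 - hs[t] ** 2)  # through tanh: d tanh(a)/da = 1 - tanh(a)^2
        dw += da * hs[t - 1]          # shared weight: contributions summed over steps
        du += da * xs[t - 1]
        dh = da * w                   # gradient passed on to h_{t-1}
    return loss, dw, du


# Check the hand-derived gradients against central finite differences.
xs, target, w, u, eps = [0.5, -1.0, 0.3, 0.8], 0.2, 0.7, 0.4, 1e-6
loss, dw, du = loss_and_grads(w, u, xs, target)
num_dw = (loss_and_grads(w + eps, u, xs, target)[0] - loss_and_grads(w - eps, u, xs, target)[0]) / (2 * eps)
num_du = (loss_and_grads(w, u + eps, xs, target)[0] - loss_and_grads(w, u - eps, xs, target)[0]) / (2 * eps)
print(dw, num_dw, du, num_du)
```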

Three key challenges emerge:

- Computational cost that grows with sequence length, since every step must be revisited in the backward pass

- Numerical precision problems as gradients are multiplied across many steps

- Memory management during multi-step unfolding

Modern frameworks address these through truncated backpropagation and optimised memory usage. Such adaptations enable practical training while preserving the architecture’s signature temporal processing capabilities.
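
Truncated BPTT can be sketched on the same kind of toy model: a single-unit RNN with a loss at every step. The long sequence is processed in chunks of `k` steps; the hidden state is carried from one chunk to the next, but gradients stop at each chunk boundary and the weights are updated once per chunk. All names, the learning rate and the sine-wave task are illustrative assumptions.

```python
import math


def chunk_loss_and_grads(w, u, h0, xs, ys):
    """Per-step squared-error loss over one chunk, with BPTT confined to the chunk.

    h0 comes from the previous chunk but is treated as a constant, so no
    gradient flows back past the chunk boundary.
    """
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    loss = sum(0.5 * (h - y) ** 2 for h, y in zip(hs[1:], ys))
    dw = du = 0.0
    dh_later = 0.0  # gradient arriving at h_t from later steps in this chunk
    for t in range(len(xs), 0, -1):
        dh = (hs[t] - ys[t - 1]) + dh_later
        da = dh * (1.0 - hs[t] ** 2)
        dw += da * hs[t - 1]
        du += da * xs[t - 1]
        dh_later = da * w
    return loss, dw, du, hs[-1]


def truncated_bptt_epoch(w, u, xs, ys, k, lr=0.05):
    """One pass over the sequence in chunks of k steps; returns updated weights and total loss."""
    h, total = 0.0, 0.0
    for start in range(0, len(xs), k):
        loss, dw, du, h = chunk_loss_and_grads(w, u, h, xs[start:start + k], ys[start:start + k])
        w, u = w - lr * dw, u - lr * du
        total += loss
    return w, u, total


# Hypothetical task: predict the next value of a sine wave.
xs = [math.sin(0.3 * t) for t in range(200)]
ys = xs[1:] + [0.0]
w, u = 0.5, 0.5
for epoch in range(5):
    w, u, total = truncated_bptt_epoch(w, u, xs, ys, k=10)
    print(epoch, total)
```

Smaller `k` lowers memory use and cost per update, at the price of never learning dependencies longer than `k` steps directly.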

Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU)

Advanced sequence modelling demands sophisticated control over temporal dependencies. Two enhanced architectures address this challenge through intelligent gate systems, overcoming limitations of earlier approaches.

LSTM Gate Mechanisms

LSTM cells employ three specialised gates to regulate information flow. The input gate decides how much new content enters the cell state, while the forget gate decides how much existing content to discard. An output gate then determines how much of the cell state is exposed as the hidden state that influences subsequent predictions. The three gates act together at every time step, letting the cell keep relevant context across long sequences without being swamped by every new input.

As explained in recent research: “The cell state acts like a conveyor belt, carrying crucial context through time steps with minimal interference.” This parallel pathway preserves vital patterns that basic architectures often lose.
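
The gate arithmetic can be sketched for a single LSTM unit. The parameter layout and gate names in `p` are hypothetical; real implementations operate on vectors and typically fuse the four pre-activations into one matrix multiply. The usage example sets the forget gate close to 1 and the input gate close to 0, so the cell state passes through several steps almost unchanged.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM.

    p maps each of "input", "forget", "output" and "candidate" to a
    hypothetical (w_x, w_h, bias) triple.
    """
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))        # how much new content to write
    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # proposed new content
    c = f * c_prev + i * g           # additive cell-state update
    h = o * math.tanh(c)
    return h, c


# Forget gate near 1, input gate near 0: the cell state barely changes.
keep = {
    "input": (0.0, 0.0, -10.0),
    "forget": (0.0, 0.0, 10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = 0.0, 0.9
for x in [5.0, -3.0, 2.0, 4.0]:
    h, c = lstm_step(x, h, c, keep)
print(c)
```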

Efficiency of GRU Models

Gated recurrent units streamline operations through two adaptive gates. The reset gate controls how much historical data informs current calculations, while the update gate blends old and new information. Fewer parameters enable faster training cycles – particularly beneficial for real-time applications like live translation.
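
A matching sketch for a single GRU unit, under the same caveats: the parameter layout and helper names are hypothetical, and the update-gate convention varies between sources (noted in the docstring). Driving the update gate towards 0 keeps the previous state; towards 1 replaces it with the candidate.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x, h_prev, p):
    """One step of a single-unit GRU.

    Uses h = (1 - z) * h_prev + z * n; some papers and libraries swap the
    roles of z and 1 - z. p maps "reset", "update" and "candidate" to
    hypothetical (w_x, w_h, bias) triples.
    """
    def pre(name, h_in):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_in + b

    r = sigmoid(pre("reset", h_prev))            # how much history feeds the candidate
    z = sigmoid(pre("update", h_prev))           # how far to move towards the candidate
    n = math.tanh(pre("candidate", r * h_prev))  # candidate built from reset-scaled history
    return (1.0 - z) * h_prev + z * n


def gate_params(update_bias):
    return {"reset": (0.0, 0.0, 0.0), "update": (0.0, 0.0, update_bias), "candidate": (1.0, 0.5, 0.0)}


kept = gru_step(3.0, 0.7, gate_params(-10.0))     # update gate near 0: keep h_prev
replaced = gru_step(3.0, 0.7, gate_params(10.0))  # update gate near 1: take the candidate
print(kept, replaced)
```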

Key performance comparisons:

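- GRUs have three weight blocks per cell (reset gate, update gate and candidate state) against four in an LSTM, about 25% fewer parameters at the same hidden size

- Without a separate cell state, GRUs store less per step during backpropagation

- Accuracy is often comparable to LSTMs, and the simpler architecture can be more effective for shorter sequences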
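
The 25% figure has a simple structural explanation: at the same input and hidden sizes, a GRU has three blocks of weights (reset gate, update gate, candidate) where an LSTM has four (input, forget and output gates plus the candidate). A back-of-the-envelope count, assuming one bias vector per block (the function names are illustrative):

```python
def cell_params(input_size, hidden_size, blocks):
    """Parameters in a gated cell: each block has input weights, recurrent weights and a bias.

    Assumes one bias vector per block; some libraries (PyTorch, for example)
    keep two, which changes the totals but not the 3:4 ratio.
    """
    per_block = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return blocks * per_block


def lstm_params(input_size, hidden_size):
    return cell_params(input_size, hidden_size, blocks=4)


def gru_params(input_size, hidden_size):
    return cell_params(input_size, hidden_size, blocks=3)


lstm, gru = lstm_params(128, 256), gru_params(128, 256)
print(lstm, gru, gru / lstm)
```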

Developers often choose GRUs when working with limited processing resources or moderate sequence lengths. Both models demonstrate how strategic gate design enhances temporal pattern recognition in modern AI systems.

Challenges in Training RNNs

Mastering temporal pattern recognition requires overcoming fundamental mathematical hurdles. These obstacles emerge during weight adjustments – a critical process where systems refine their predictive capabilities through repeated exposure to data sequences.

Addressing Vanishing and Exploding Gradients

Two persistent issues plague sequence-based architectures:

- Vanishing gradients: Gradual loss of update signals during backward passes

- Exploding gradients: Uncontrolled amplification of adjustment values

The root cause lies in repeated matrix multiplications across time steps. The backward pass multiplies the gradient by one partial derivative (a Jacobian) per step, so small factors shrink it and large factors amplify it, exponentially in the number of steps. As one AI researcher observes: “It’s like trying to hear whispers in a storm – either the message gets drowned out or blows out your speakers.”
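
The compounding effect shows up even with a single hidden unit and no inputs. This toy recurrence is an illustrative assumption: real networks multiply Jacobian matrices rather than scalars, and the exploding case here is deliberately contrived (the state sits at zero, where tanh is steepest).

```python
import math


def grad_through_time(w, h0, steps):
    """d h_T / d h_0 for the toy recurrence h_t = tanh(w * h_{t-1}) (no inputs).

    By the chain rule this is a product with one factor w * (1 - h_t ** 2) per step.
    """
    grad, h = 1.0, h0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h ** 2)
    return grad


vanishing = grad_through_time(w=0.5, h0=0.1, steps=50)  # every factor is at most 0.5
exploding = grad_through_time(w=1.5, h0=0.0, steps=50)  # h stays 0, so every factor is 1.5
print(vanishing, exploding)
```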

| Challenge | Primary Cause | Practical Impact | Common Solutions |
|---|---|---|---|
| Vanishing Gradients | Small derivative values compounding | Stalled learning of long-range dependencies (early time steps) | LSTM/GRU gates, ReLU variants |
| Exploding Gradients | Large weights or derivative values compounding | Unstable parameter updates | Gradient clipping, weight constraints |

Modern approaches combine multiple mitigation strategies. Gradient clipping caps the magnitude of updates during gradient descent, preventing destabilising jumps. Careful weight initialisation, such as Xavier (Glorot) initialisation, establishes stable starting points for parameters.
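
Both techniques are short to write down. A sketch of clipping by global norm and of Xavier (Glorot) uniform initialisation in plain Python; the function names are ours, and frameworks provide their own versions (PyTorch, for example, has `torch.nn.utils.clip_grad_norm_` and `torch.nn.init.xavier_uniform_`).

```python
import math
import random


def clip_by_global_norm(grads, max_norm):
    """Scale gradients down so their combined L2 norm is at most max_norm.

    The update keeps its direction; only its length is capped.
    """
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * (max_norm / norm) for g in grads]


def xavier_uniform(fan_in, fan_out, rng=None):
    """fan_out x fan_in weights drawn from U(-a, a), with a = sqrt(6 / (fan_in + fan_out))."""
    rng = rng or random.Random()
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]


print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))  # norm 5 scaled to norm 1
W = xavier_uniform(fan_in=100, fan_out=50, rng=random.Random(0))
```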

Architectural innovations proved pivotal. LSTM’s memory gates, developed in the 1990s, bypass problematic multiplications through additive interactions. GRU models later simplified these mechanisms, balancing efficiency with effectiveness.

Ongoing research explores hybrid approaches combining attention mechanisms with traditional feedback loops. These developments continue reshaping how systems manage temporal dependencies while keeping models trainable.

Variations of RNN Models for Different Tasks

Tailored architectures address specific challenges in sequence processing. Designers adapt input-output configurations to match task requirements, creating specialised tools for temporal analysis. This flexibility enables applications ranging from creative algorithms to financial forecasting systems.

Diverse Architectures and Their Uses

Four primary configurations dominate practical implementations:

| Model Type | Input-Output Structure | Common Uses |
|---|---|---|
| One-to-One | Single input → Single output | Image classification, Hybrid systems |
| One-to-Many | Single input → Sequence output | Music generation, Image captioning |
| Many-to-One | Sequence input → Single output | Sentiment analysis, Document scoring |
| Many-to-Many | Sequence input → Sequence output | Speech recognition, Machine translation |
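
Much of the difference between these configurations lies in what is fed in and which hidden states are read out. A sketch using one toy scalar RNN (weights and function names are illustrative assumptions; a real model would add an output layer on top of the selected states). One-to-one is omitted because it needs no recurrence at all.

```python
import math


def run_rnn(inputs, w_x=0.5, w_h=0.8):
    """Scalar RNN with illustrative weights; returns the hidden state after every step."""
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def many_to_many(sequence):
    return run_rnn(sequence)       # one output per step, e.g. a tag per word


def many_to_one(sequence):
    return run_rnn(sequence)[-1]   # final state only, e.g. one sentiment score


def one_to_many(x, length, w_x=0.5, w_h=0.8):
    """Seed with a single input, then feed each output back in as the next input."""
    h, inp, outputs = 0.0, x, []
    for _ in range(length):
        h = math.tanh(w_x * inp + w_h * h)
        outputs.append(h)
        inp = h
    return outputs


seq = [0.2, -0.4, 0.9]
print(many_to_many(seq), many_to_one(seq), one_to_many(1.0, length=4))
```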

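Bidirectional variants run one recurrent pass forwards and another backwards over the sequence, then combine the two hidden states, so each position sees both earlier and later context. This helps when the whole sequence is available in advance, as in speech recognition or tagging words in a sentence, but it does not suit step-by-step forecasting, where future inputs are unknown. Reported accuracy gains vary by task and dataset.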
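
A toy sketch of the bidirectional idea, assuming two independent scalar RNNs (names and weights are hypothetical; libraries typically concatenate the two state vectors rather than pairing them). Because the backward pass starts from the end, no output can be produced until the whole sequence is known.

```python
import math


def run_rnn(inputs, w_x, w_h):
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def bidirectional(sequence):
    """Pair each step's forward state (past context) with its backward state (future context).

    The two directions have separate weights; the values here are illustrative.
    """
    forward = run_rnn(sequence, w_x=0.5, w_h=0.8)
    backward = run_rnn(sequence[::-1], w_x=0.7, w_h=0.6)[::-1]  # re-align with original order
    return list(zip(forward, backward))


# Changing only the last input alters the backward half of the very first step.
a = bidirectional([1.0, 0.0, 0.0, 0.0])
b = bidirectional([1.0, 0.0, 0.0, 5.0])
print(a[0], b[0])
```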

Stacked architectures introduce multiple processing tiers. Each layer extracts progressively complex patterns, enabling hierarchical understanding of sequential data. Financial institutions employ these deep configurations for high-frequency trading algorithms requiring multi-level temporal analysis.
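
Stacking is equally simple to sketch: each layer consumes the full sequence of hidden states produced by the layer below. The per-layer weights are illustrative assumptions, and each layer has a single unit for brevity.

```python
import math


def rnn_layer(inputs, w_x, w_h):
    """Single-unit RNN layer: returns the hidden state at every step."""
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def stacked_rnn(inputs, layer_weights):
    """Each layer reads the full hidden-state sequence produced by the layer below."""
    sequence = inputs
    for w_x, w_h in layer_weights:
        sequence = rnn_layer(sequence, w_x, w_h)
    return sequence


top = stacked_rnn([1.0, 2.0, 3.0], [(0.5, 0.8), (1.2, 0.3), (0.9, 0.5)])
print(top)
```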

Developers typically choose a configuration based on three factors:

- Input data characteristics (fixed vs variable length)

- Required output format (single value vs continuous stream)

- Available computational resources

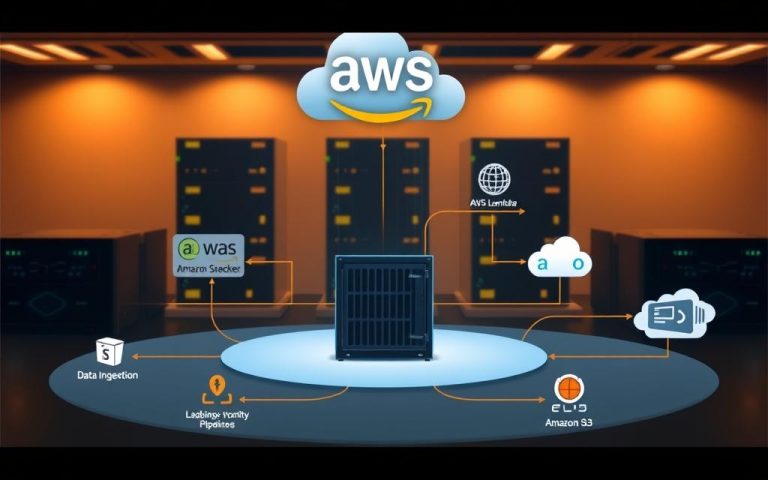

Real-World Applications of RNNs

Practical implementations reveal how sequence-aware systems transform industries through temporal pattern analysis. These architectures excel where order and context determine outcomes, offering solutions beyond conventional approaches.

Applications in Natural Language Processing

Machine translation systems demonstrate their capacity to preserve grammatical structures across languages. By processing phrases as interconnected sequences, they maintain contextual relationships between words. Voice assistants like Siri utilise similar principles for speech recognition, converting audio waves into accurate text transcriptions.

Chatbots employ these models to generate human-like responses through dialogue flow analysis. This enables more natural interactions in customer service platforms across UK-based enterprises.

Extending to Time Series Analysis

Financial institutions leverage temporal models for predicting stock market trends. They analyse historical pricing data alongside real-time trading volumes, identifying patterns invisible to static algorithms.

Energy companies apply these techniques to forecast electricity demand. Sensor information from smart grids gets processed as continuous streams, optimising power distribution. Such implementations prove vital for managing renewable energy sources with variable outputs.
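
Before any modelling, a long history such as prices or demand readings is usually cut into fixed-length input windows paired with the value to be predicted. A minimal sketch (the function name, the `horizon` parameter and the demand figures are illustrative assumptions); each window would then be fed to the recurrent model one step at a time.

```python
def make_windows(series, window, horizon=1):
    """Split one long series into (input window, target) pairs.

    Each input holds `window` consecutive values; the target is the value
    `horizon` steps after the window's last element.
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs


daily_demand = [120, 135, 128, 150, 162, 158, 170]  # made-up example values
for inputs, target in make_windows(daily_demand, window=3):
    print(inputs, "->", target)
```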

From healthcare diagnostics to supply chain optimisation, these systems continue redefining how industries handle dynamic data. Their adaptability ensures ongoing relevance in our increasingly connected world.

FAQ

Are recurrent neural networks considered a component of deep learning?

Yes. These architectures form a subset of methodologies within deep learning, designed to process sequential or temporal patterns by retaining internal states. Their ability to manage dependencies across time steps makes them integral for tasks like speech recognition or machine translation.

How do RNNs differ from convolutional neural networks?

Unlike CNNs, which excel at spatial data like images through filters and pooling layers, RNNs handle sequential inputs by using loops to pass information between time steps. This memory mechanism allows them to model context in text or time series effectively.

What challenges arise when training RNNs?

Two primary issues are vanishing and exploding gradients, which destabilise weight updates during backpropagation through time. Solutions like LSTMs or gradient clipping mitigate these problems, enabling more stable training over long sequences.

Why are LSTM and GRU models significant in modern AI?

Long Short-Term Memory units and Gated Recurrent Units introduce gate mechanisms to regulate information flow. These structures address memory retention limitations in standard architectures, enhancing performance in tasks requiring long-term dependency tracking, such as language modelling.

In what real-world scenarios are RNNs commonly applied?

They power applications like sentiment analysis, machine translation, and stock prediction. Their capacity to process variable-length sequences makes them ideal for natural language processing and time series forecasting, where context and temporal trends are critical.

How does backpropagation through time function in RNNs?

This algorithm unrolls the network across time steps, computes the gradient contribution at each step and sums these contributions for the shared weights before updating them. While effective, it demands substantial computational resources and can struggle with very long sequences due to gradient decay or explosion.

Can RNNs handle non-sequential data effectively?

Their design prioritises sequential dependencies, making them less suitable for static data like images. Convolutional or feedforward architectures typically outperform them in such scenarios due to superior spatial feature extraction capabilities.

What role do activation functions play in RNN performance?

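Functions like tanh or ReLU introduce non-linearity, enabling complex pattern learning. However, the choice affects gradient behaviour: tanh keeps hidden-state values bounded between -1 and 1, which limits runaway activations, though its saturating derivative can contribute to vanishing gradients, while ReLU variants can accelerate convergence in certain architectures.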